Here I’d like to share some experience (mostly lessons) from searching for a job in the tech industry over the last couple of months. It’s no secret that the tech job market in 2025 is pretty tough, and I hope my experience can be somewhat useful to you.

Building a School Library Assistant with FastMCP and DSPy (II)

Last time, I dived into building a toy MCP service for a school library assistant that helps you search for books, borrow copies, and return them. I quickly realized it wasn’t practical: without authentication and authorization, the service was only useful for an administrator with full access.

What if we want to expose a real service? Security needs to be a first-class citizen.

An MCP service itself is not that mysterious. It’s essentially built on HTTP, with an extra layer of protocol and message formatting on top. We can leverage all the usual concepts for securing HTTP services, like TLS and OAuth. With the FastMCP framework we can extend authentication and authorization (authn/authz) much as we would in regular Python backend development.
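To make the authn/authz split concrete, here’s a minimal sketch in plain Python. It is not FastMCP’s auth API: the token store, the roles, and the `add_book` tool are all hypothetical names I made up for illustration, and a real service would validate an OAuth access token over TLS instead of looking up a hard-coded string.

```python
from dataclasses import dataclass

# Hypothetical role-to-tool permissions for the library assistant.
ROLE_PERMISSIONS = {
    "admin": {"search_books", "borrow_book", "return_book", "add_book"},
    "student": {"search_books", "borrow_book", "return_book"},
    "guest": {"search_books"},
}

# Hypothetical token store standing in for real OAuth token validation.
TOKENS = {
    "token-alice": ("alice", "student"),
    "token-root": ("root", "admin"),
}


@dataclass(frozen=True)
class Principal:
    user: str
    role: str


def authenticate(authorization_header):
    """Authn: resolve a 'Bearer <token>' header to a Principal, or None."""
    scheme, _, token = (authorization_header or "").partition(" ")
    if scheme.lower() != "bearer" or token not in TOKENS:
        return None
    user, role = TOKENS[token]
    return Principal(user, role)


def authorize(principal, tool):
    """Authz: check whether the authenticated principal may call the tool."""
    if principal is None:
        return False
    return tool in ROLE_PERMISSIONS.get(principal.role, set())


alice = authenticate("Bearer token-alice")
print(authorize(alice, "borrow_book"))  # a student may borrow
print(authorize(alice, "add_book"))     # but may not add books
```

The point of keeping the two functions separate is that the identity check happens once per request, while the permission check happens once per tool call.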

This is a toy implementation, but I think it can demo the basic ideas behind MCP services and LLM integration.

Code here: https://github.com/hxy9243/agents/tree/main/librarian

See last blog: https://blog.kevinhu.me/2025/08/09/Building-MCP-with-DSPy/

Building a School Library Assistant with FastMCP and DSPy (I)

Recently I’ve been looking into MCP and how it integrates with development frameworks. I did some hands-on tinkering and learned quite a bit. Here are my learnings and some reflections.

Code here: https://github.com/hxy9243/agents/tree/main/librarian

What’s MCP

Bird’s Eye View

MCP (Model Context Protocol) is a standard way for AI agents to securely connect to your services. It provides a uniform interface for tools, resources, and prompts, enabling seamless integration between AI systems and external services.

Clients like VSCode, Gemini Code, or Claude Code can now integrate with different tools and services and become incredibly powerful: reading PDFs, searching websites, reading your Google Drive files, or booking plane tickets for you. You can implement an MCP service once and easily install it in the AI assistants of your choice.

With this standardization, you can implement your MCP server once and it can integrate directly with your users’ agents, assistants, or more complicated workflows. They can call and leverage your tools through a standardized interface.

Think of it as an App Store moment for app discovery and AI integration.
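To give a feel for what that standardized interface looks like on the wire, here’s a rough sketch of a tool call. MCP messages are JSON-RPC 2.0, and a tool invocation uses the `tools/call` method. Everything else here is my own assumption for illustration: the `search_books` tool, the tiny catalog, and the toy dispatcher, which stands in for a real server and is not how FastMCP is implemented.

```python
import json

# Hypothetical catalog and tool for the library assistant.
CATALOG = ["Dune", "Neuromancer", "Emma"]


def search_books(query):
    """Return catalog titles containing the query (case-insensitive)."""
    return [title for title in CATALOG if query.lower() in title.lower()]


TOOLS = {"search_books": search_books}


def make_tool_call(request_id, tool_name, arguments):
    """Build the JSON-RPC 2.0 request a client sends to call a tool."""
    return {
        "jsonrpc": "2.0",
        "id": request_id,
        "method": "tools/call",
        "params": {"name": tool_name, "arguments": arguments},
    }


def handle_request(request, tools):
    """Toy server side: dispatch a tools/call request to a Python function."""
    params = request["params"]
    result = tools[params["name"]](**params["arguments"])
    return {
        "jsonrpc": "2.0",
        "id": request["id"],
        "result": {
            "content": [{"type": "text", "text": json.dumps(result)}],
            "isError": False,
        },
    }


request = make_tool_call(1, "search_books", {"query": "dune"})
response = handle_request(request, TOOLS)
print(json.dumps(response, indent=2))
```

Because every client speaks this same request and response shape, the server only has to describe its tools once.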

Learning AI Agent Programming (with DSPy)

I just realized that I haven’t written any blog posts for about a year. There was a lot of good learning and experience over the last year that I didn’t get a chance to write down. It’s a good time to dust off this blog and get back into the writing habit.

A Dive Into AI Agent Programming

Recently I got really interested in AI agent programming, and I spent some time diving into DSPy. At first I was a little perplexed when I started with its papers (which focus more on program optimization than on actual programming). But once I understood its design I immediately fell in love with it, as its design philosophy resonated with me very strongly.

You can specify and start writing an LLM agent program right away with just a few lines of Python:

sentence = "it's a charming and often affecting journey."  # example from the SST-2 dataset.

(Examples from https://dspy.ai/learn/programming/signatures/.)

And I can quickly craft a few agents and combine them together to make an agentic workflow.

Making a Toy Gradient Descent Implementation

1 Introduction

I recently came across a few of Andrej Karpathy’s video tutorial series on Machine Learning and I found them immensely fun and educational. I highly appreciate his hands-on approach to teaching the basic concepts of Machine Learning.

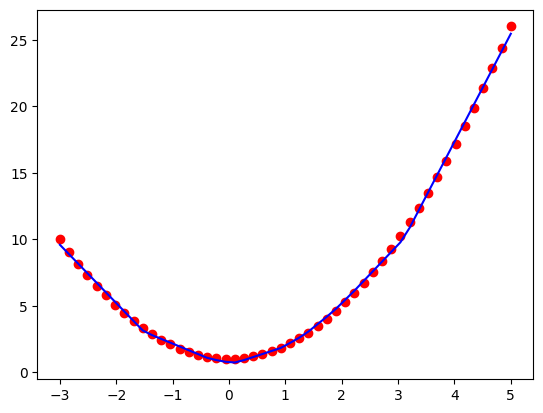

Richard Feynman once famously stated, “What I cannot create, I do not understand.” So here’s my attempt to create a toy implementation of gradient descent, to better understand the core algorithm that powers Deep Learning, after learning from Karpathy’s video tutorial on micrograd:

https://github.com/hxy9243/toygrad

Even though there’s a plethora of books, blogs, and references that explain the gradient descent algorithm, it’s a totally different experience when you get to build it yourself from scratch. Along the way I found quite a few gaps in my own knowledge, things that I’d taken for granted and didn’t fully understand.

This blog post collects my notes from that experience. Even writing it helped my understanding in many ways.
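To give a taste of what “building it yourself” means, here’s a minimal sketch in the spirit of micrograd. It is not the code from toygrad or from Karpathy’s tutorial: the `Value` class below supports only addition and multiplication, and the `fit` helper with its learning rate and step count is a made-up example that fits a single weight `w` so that `w * x` matches `y`.

```python
class Value:
    """A scalar that remembers how it was computed so gradients can flow back."""

    def __init__(self, data, parents=()):
        self.data = data
        self.grad = 0.0
        self._parents = parents
        self._backward_fn = None  # pushes this node's grad to its parents

    def __add__(self, other):
        other = other if isinstance(other, Value) else Value(other)
        out = Value(self.data + other.data, (self, other))

        def backward_fn():
            # d(a + b)/da = 1 and d(a + b)/db = 1
            self.grad += out.grad
            other.grad += out.grad

        out._backward_fn = backward_fn
        return out

    def __mul__(self, other):
        other = other if isinstance(other, Value) else Value(other)
        out = Value(self.data * other.data, (self, other))

        def backward_fn():
            # d(a * b)/da = b and d(a * b)/db = a
            self.grad += other.data * out.grad
            other.grad += self.data * out.grad

        out._backward_fn = backward_fn
        return out

    def backward(self):
        """Backpropagate from this node through the graph in reverse topological order."""
        order, seen = [], set()

        def visit(node):
            if id(node) not in seen:
                seen.add(id(node))
                for parent in node._parents:
                    visit(parent)
                order.append(node)

        visit(self)
        self.grad = 1.0
        for node in reversed(order):
            if node._backward_fn is not None:
                node._backward_fn()


def fit(x, y, steps=50, lr=0.05):
    """Gradient descent on the squared error (w * x - y) ** 2, starting from w = 0."""
    w = Value(0.0)
    for _ in range(steps):
        err = w * x + (-y)
        loss = err * err
        w.grad = 0.0          # reset before each backward pass
        loss.backward()
        w.data -= lr * w.grad  # step against the gradient
    return w.data


print(fit(2.0, 6.0))  # approaches 3.0, since 3.0 * 2.0 == 6.0
```

The whole trick is that each operation only needs to know its own local derivative; the chain rule takes care of the rest when `backward` walks the graph.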

Paper Readings on LLM Task Performing - II

1 Overview

Previously: Paper Readings on LLM Task Performing

I’m still combing through the papers on LLMs and I’ll summarize my readings here on this blog. There’s an increasing amount of interest and attention in this field, so there will be many more papers in the foreseeable future. Hopefully I can squeeze out more time to read and share my experience with all of you.

Hacking LangChain For Fun and Profit - I

1 Overview

Recently I’ve looked into the LangChain project and I was surprised by how powerful and mature a project it has become in such a short span of time. It covers many essential tools for creating your own LLM-driven projects, abstracting away cumbersome steps with only a few lines of code.

I like the direction the project is going, and the development team has been proactive about bringing new ideas and the latest LLM features into the project.

The path to understanding this new project wasn’t really smooth. It has its own opinions on code organization, and it can be unintuitive to figure out how to hack on your own projects beyond the tutorials. Many of the tutorials out there explain how to create a small application with LangChain but don’t cover how to intuitively comprehend its abstractions and design choices.

Hence I have taken the initiative to document my personal cognitive process throughout this journey. By doing so, I aim to clarify my own understanding while also providing assistance to y’all who are interested in hacking LangChain for fun and profit.

This blog post is dedicated to an overall understanding of the concepts. I found it really helpful to start with the concepts that directly interact with the LLM, especially the core API interfaces. Once you have a mind map of all the LangChain abstractions, it’s much more intuitive to hack on and extend your own implementation.

Paper Readings on LLM Task Performing

1 Overview

Ever since the release of ChatGPT, I’ve spent the last couple of months reading about the development of AI and NLP in general. Here are some of my personal findings, specifically on task performing capabilities.

The field of AI has been advancing rapidly, and the results have exceeded expectations for many users and researchers. One particularly impressive development is the Large Language Model (LLM), which has demonstrated a remarkable ability to generate natural, human-like text. Another exciting example is ChatGPT from OpenAI, which has shown impressive task performing and logical reasoning capabilities.

Looking ahead, I am optimistic that LLMs will continue to be incredibly effective at performing more complex tasks with the help of plugins, prompt engineering, and some human input and interaction. The potential applications for LLMs are vast and promising.

I’ve compiled a list of papers on extending task performing capabilities in this field. I’m quite excited about the longer-term potential of this capability, which brings LLMs like ChatGPT to more powerful applications.

Here’s my first list of papers, which I also consider to be the more fundamental ones, along with my very quick summaries.

OpenAPI Generator For Go Web Development

The OpenAPI Generator for Go API and Go web app development works surprisingly well, but somehow I found that it’s not mentioned very often. Recently I tried it in one of my projects, and in my (limited) experience with it, I was pleasantly surprised by how good it was. With some setup, it can generate Go code of decent quality, and it’s fairly easy to use once you get the hang of it. Whether you’re building a standalone web app from scratch or creating a service with REST API endpoints, openapi-generator might come in handy for you.

Using a generator can save a lot of time when kick-starting your web app project. More importantly, I found that a good, well-defined, consistent API definition is crucial to your development, testing, and above all, communication among teams and customers. I highly recommend that for any sizable project, you spend some quality time on writing a good API spec. It’ll become essential to your development workflow. I used to highly doubt this, and now I don’t think I can live without it.

And if you manually keep documentation or API specifications in sync with your Go code, you’ll have a hard time reviewing, checking, and testing between code and specs. The best way IMHO is to automate the process, by either generating the API code from the spec, or the other way around.

Many tools support one of these two approaches, and openapi-generator is one of the really nice ones, which I’m going to introduce in this blog post.

OpenAPI Generator supports many languages on both the server and the client side, and it has generators for different Go frameworks. Here I’m going to use the go-server generator as an example. It uses the Gorilla framework for the server-side code.

For this blog post I’ve also made an example of code generation in my GitHub repo. I’ve generated the code, and implemented only one endpoint /books with example data:

https://github.com/hxy9243/go-examples/tree/main/src/openapi

Building Applications With Cassandra: Experience And Gotchas

Recently I summarized some experience on quickly getting started with Cassandra. In this post I’d like to keep writing about some of our experience using and operating Cassandra. Hopefully it will be useful to you, and help you avoid unwanted surprises in the future.

Election and Paxos

Cassandra is usually considered to favor “AP” in the CAP theorem: it settles for eventual consistency in exchange for availability and performance. But when really necessary, you can still leverage Cassandra’s built-in lightweight transactions (LWT) for elections to determine a leader node in the cluster.

Basically, it works by writing to a table with your own lease:

INSERT INTO leases (name, owner) VALUES ('lease_master', 'server_1') IF NOT EXISTS;

The IF NOT EXISTS clause triggers Cassandra’s built-in lightweight transaction, which runs Paxos under the hood, and can be used to reach consensus across a cluster. With a default TTL on the table, this can be used for lease control or master election. For example:

CREATE TABLE leases (

This way, the lease owner needs to keep writing to the lease row as a heartbeat, or the lease expires.
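To show how the lease logic plays out over time, here’s a small in-memory simulation. It does not talk to Cassandra at all: `LeaseTable` is a hypothetical stand-in that mimics `INSERT ... IF NOT EXISTS` plus a table TTL, and I’m assuming the heartbeat is a conditional write that only succeeds for the current owner. The injected clock is only there so the example can be stepped through deterministically.

```python
import time


class LeaseTable:
    """In-memory stand-in for a Cassandra table with a default TTL."""

    def __init__(self, ttl_seconds, clock=time.monotonic):
        self.ttl = ttl_seconds
        self.clock = clock
        self.rows = {}  # lease name -> (owner, expires_at)

    def _live_owner(self, name):
        """Return the current owner, or None if the row is missing or expired."""
        row = self.rows.get(name)
        if row is None or row[1] <= self.clock():
            return None
        return row[0]

    def insert_if_not_exists(self, name, owner):
        """Mimic INSERT ... IF NOT EXISTS: True means the write was applied."""
        if self._live_owner(name) is not None:
            return False
        self.rows[name] = (owner, self.clock() + self.ttl)
        return True

    def renew(self, name, owner):
        """Heartbeat: refresh the TTL, but only if `owner` still holds the lease."""
        if self._live_owner(name) != owner:
            return False
        self.rows[name] = (owner, self.clock() + self.ttl)
        return True


# Drive the simulation with a fake clock instead of sleeping.
now = [0.0]
leases = LeaseTable(ttl_seconds=10, clock=lambda: now[0])

print(leases.insert_if_not_exists("lease_master", "server_1"))  # server_1 wins
print(leases.insert_if_not_exists("lease_master", "server_2"))  # server_2 loses
now[0] = 5.0
print(leases.renew("lease_master", "server_1"))                 # heartbeat extends the lease
now[0] = 15.0
print(leases.insert_if_not_exists("lease_master", "server_2"))  # lease expired, server_2 takes over
```

The TTL is what makes this safe against a crashed leader: if the owner stops sending heartbeats, the row disappears and another node can claim the lease.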

I’m not sure how the performance characteristics of Cassandra’s election behavior compare with other systems (etcd, ZooKeeper, …), and it would be interesting to see a study. But since those are more full-featured and better understood when it comes to keeping consensus, I’d recommend delegating this behavior to them unless you’re stuck with Cassandra for your application.